MeTACAST: Target- and Context-aware Spatial Selection in VR

Lixiang Zhao, Tobias Isenberg, Fuqi Xie, Hai-Ning Liang, Lingyun Yu

DOI: 10.1109/TVCG.2023.3326517

Room: 106

2023-10-25T03:36:00Z

Keywords

Spatial selection, immersive analytics, virtual reality (VR), target-aware and context-aware interaction for visualization

Abstract

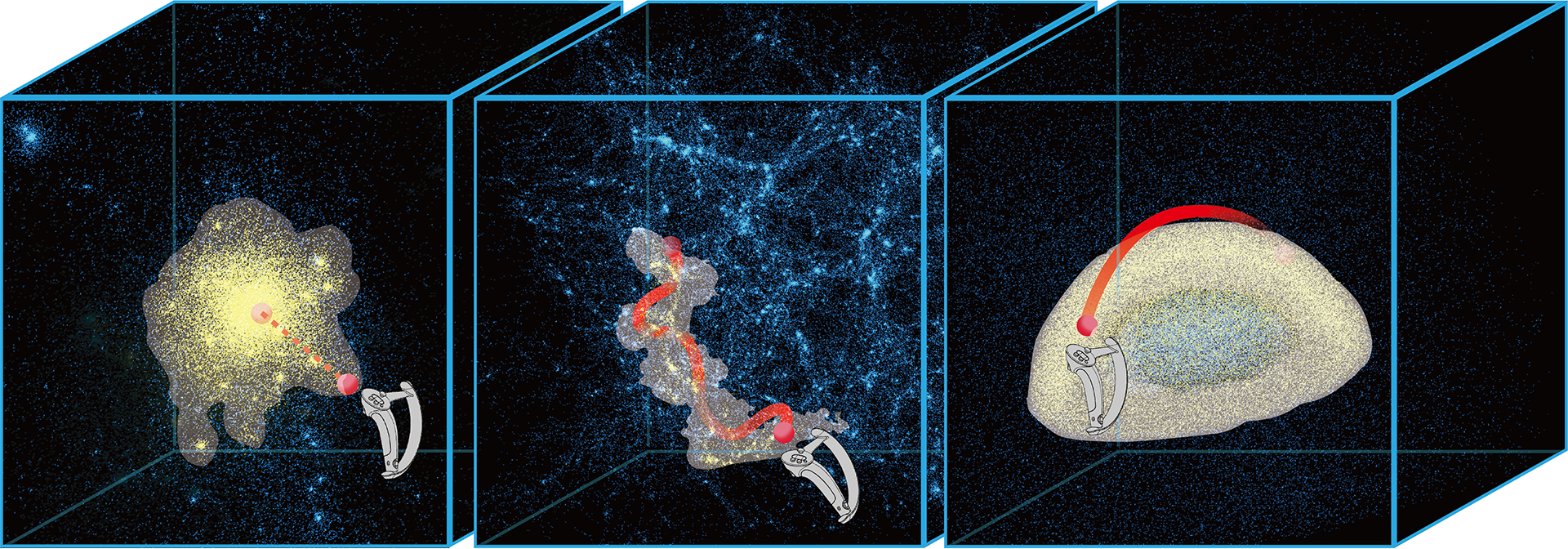

We propose three novel spatial data selection techniques for particle data in VR visualization environments. They are designed to be target- and context-aware and suitable for a wide range of data features and complex scenarios. Each technique is tailored to a particular selection intent: the selection of consecutive dense regions, the selection of filament-like structures, or the selection of clusters. All three support post-selection threshold adjustment. With simple and approximate 3D pointing, brushing, or drawing, these techniques allow users to precisely select regions of space for further exploration using flexible point- or path-based input, without being limited by 3D occlusions, non-homogeneous feature density, or complex data shapes. We evaluate the new techniques in a controlled experiment and compare them with the Baseline method, a region-based 3D painting selection. Our results indicate that the techniques are effective in handling a wide range of scenarios and allow users to select data based on their comprehension of crucial features. Furthermore, we analyze the attributes, requirements, and strategies of our spatial selection methods and compare them with existing state-of-the-art selection methods for handling diverse data features and situations. From this analysis, we derive guidelines for choosing the most suitable 3D spatial selection technique according to the interaction environment, the given data characteristics, or the need for interactive post-selection threshold adjustment.